Cambridge signs up to new AI rules

Cambridge University joins the other 23 Russell Group universities in approving a list of ‘guiding principles’ for the use of AI in higher education

The University of Cambridge has agreed to a new set of guiding principles to help capitalise on the use of AI in university education.

The University, as a member of the Russell Group, has helped draw up these guiding principles to support “ethical and responsible use of generative AI, new technology and software like ChatGPT”, while preserving academic rigour and educating students on the potential risks of AI.

Despite past suggestions that generative AI should be banned from higher education, the new guidelines state that “adapting to the use of generative AI” is “no different” from universities updating their assessment methods in response to “new research, technological developments and workforce needs”.

As the appropriate use of AI is “likely to differ between academic disciplines”, “universities will encourage academic departments to apply institution-wide policies within their own context” and to “consider how these tools might be applied appropriately for different student groups or those with specific learning needs”.

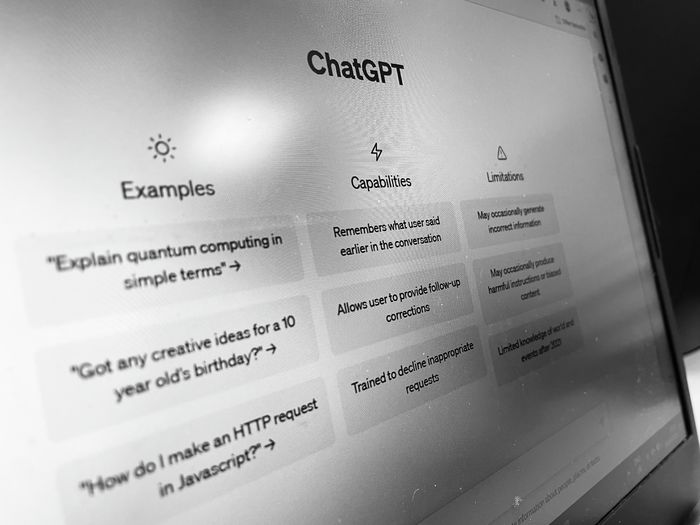

ChatGPT is one example of generative AI. It is an interactive chatbot which uses AI technology to generate text in seconds. It has surprised many with its ability to produce fluent and coherent prose, such as a Varsity article or a piece of academic work, in response to a range of user-written prompts. The output can be indistinguishable from prose written by a human.

A Varsity survey earlier this year found that almost half of students have used ChatGPT to complete university work, and that STEM students are more likely to use the chatbot for this purpose than humanities students.

The policy shift is motivated by “the transformative opportunity provided by AI”, according to the Chief Executive of the Russell Group, Dr Tim Bradshaw. It aims to “cultivate an environment where students can ask questions about specific cases of their use and discuss the associated challenges openly and without fear of penalisation”.

This echoes comments made by Cambridge’s pro-vice-chancellor for education, Bhaskar Vira, who told Varsity that bans on AI software are not “sensible”. Vira remarked earlier this year that “we have to recognise that [AI] is a tool people will use but then adapt our learning, teaching and examination processes so that we can continue to have integrity while recognising the use of the tool”.

The move is in stark contrast to earlier talk of banning or heavily limiting the use of AI by students, motivated by concerns over cheating.

Exam regulations for certain Tripos papers, such as those set by the Faculty of Modern and Medieval Languages and Linguistics this year, explicitly banned the use of chatbots in online assessments. The following was printed: “In the context of a formal University assessment, such as this examination, plagiarism, self-plagiarism, collusion, and the use of content produced by AI platforms such as ChatGPT are all considered forms of academic misconduct which can potentially lead to sanctions under the University’s disciplinary procedures.”
