Is peer review the way forward?

Chloe Li explains the importance of peer review in science, discusses its shortcomings and considers what alternative routes we can take

“Has it been peer reviewed?” This is the question most often asked before delving deeper into a research article, or before switching tabs. Peer review is not only a quality baseline in science but also the bottleneck on publication and funding. Yet for such a cornerstone practice, peer review itself has attracted relatively little scrutiny, perhaps to science’s detriment.

Peer review filters publication in three stages. First is a desk evaluation: the editor screens submissions, deciding which manuscripts are sent out for review. Each manuscript that passes is then assigned to one to three peers for the second stage of evaluation. The criteria differ between journals but are similar in tone. Nature asks for papers that provide “strong evidence for its conclusions”, are “novel” and “of extreme importance to scientists in the specific field”. Based on the reviews, which stay anonymous unless reviewers choose otherwise, the editor decides whether the work is admitted. Finally, the work is returned to the authors for revision before publication.

Proponents have argued that peer review not only serves the practical needs of scientific communication but also embodies an epistemological principle underpinning the scientific method. Modern science, as a problem-solving enterprise, relies on conjecture and refutation. As Popper argued, only exposure to rational criticism can eliminate errors. Criticism, and continued survival against it, is how knowledge grows and comes to be deemed objectively true. This is analogous to competitive selection in biological evolution: in scientific evolution, peer review acts as a primary selecting force and journal publication is the main channel of reproduction.

For selection to be effective, the selection criteria must be well-defined and reliable. Whimsical or partial judgements only sow confusion. To borrow from information theory, “the enhancement of signal/noise ratio is fraught with the risk of distorting the signal”. Yet the criteria set for reviewers are largely imprecise and open-ended. For judging originality and significance, Nature instructs its reviewers by asking “on a more subjective note, do you feel that the results presented are of immediate interest to many people in your own discipline?” This room for subjective interpretation invites disagreement between reviewers, who, as shown by Kravitz et al. (2018), “agreed on the disposition of manuscripts at a rate barely exceeding what would be expected by chance”. The difficulty is compounded by the recursive nature of Popper’s logic: refutations are themselves only conjectures. When the standards for weighing scientific merit are multidimensional and hard to test, it is unclear whether reviews are reliable even when reviewers reach consensus.

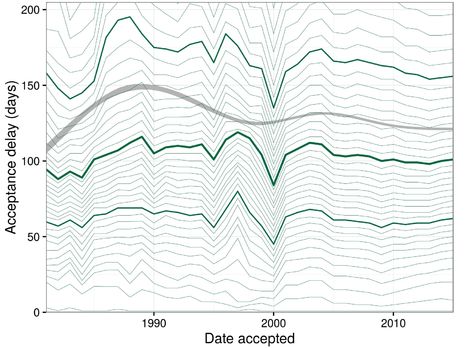

Worse, refereeing places the burden of proof on the author, where it arguably should not lie. This burden is heavy in two senses. First, refereeing is slow and costly. One study shows that median review time has hovered around 100 days for the past 30 years, with high-impact journals taking longer. This substantially taxes a scientist’s working lifetime and research momentum. A 2018 paper shows that “acceptance rates for the most prestigious journals have fallen below 10% and the labor of rejecting submissions has become these journals’ largest cost item.” Second, errors are asymmetric. A false rejection is inherently more damaging than a false acceptance: a false idea that is published stands to be falsified, but a suppressed idea is simply lost. The impact of unwarranted rejections is therefore unpredictable.

Moreover, it is perhaps unrealistic to expect the value of new ideas to be obvious. The significance of Mendel’s 1866 paper “Experiments on Plant Hybridization” in elucidating inheritance famously went unrecognised until 1900. Knowledge grows unexpectedly: the implications of seemingly tangential ideas sometimes need long-term exposure to surface. One contributor to this obscurity is the specialisation of expertise. With new fields and methods constantly being invented, scientists in narrow or innovative branches may effectively have no peers. The dawn of a new field has often seen the rejection of papers that later proved fundamental, be it Fermi’s theory of beta decay, Krebs’ proposal of the citric acid cycle, or Lynn Margulis’s endosymbiont hypothesis. Because peer review is small-scale and anonymous, it can unintentionally act as censorship; hence it is essential, in the words of scientist Malcolm Atkinson, that “referees should not attempt to usurp the function of open scientific debate”.

The expansion of research has spawned a “publish or perish” culture. Inevitably, journal publication is not just for communication’s sake – it is how academic careers are built. Publishing in a prestigious journal confers one-off, short-term credit, awarded without the benefit of hindsight. The pressure on employers and researchers to prioritise this over long-term performance creates a bottleneck that incentivises publication bias and data fudging. Undoubtedly, this is a systemic flaw in our academic tradition, one that contributes to the reproducibility crisis and the accrual of false positives. The methodology itself needs reform.

One proposal, from Heesen and Bright (2020), is to switch from pre-publication to post-publication review. Authors would publish freely in a preprint-archive format similar to arXiv or bioRxiv, which allows peer comments and author follow-ups. Journals would then curate work based on post-publication reviews, with selection functioning like a prize. The spread of ideas would thus not be controlled preemptively but amplified post hoc. Something close to this model is already used in parts of mathematics and physics, where uploading to arXiv gives immediate worldwide access and invites responses, and is hence often considered publication in itself. Some influential papers have remained entirely as e-prints, like Grigori Perelman’s proof of the Poincaré conjecture: “If anybody is interested in my way of solving the problem, it’s all there – let them go and read about it.” By expediting publication and prolonging review, this switch might better support rapid research turnover and reduce the sway of top journals.

Other experiments have promoted transparent peer review. Under a scheme launched by Nature Communications in 2016, authors can opt to have their full peer-review record published. The option, now taken up by 70% of the journal’s authors and extended to nine Nature-branded journals, has pushed towards more accountable decision-making.

While no solution has yet been settled on, it is clear that peer review’s role in science should be challenged from every perspective: practical, epistemological and cultural. As procedural flaws cascade into scientific research, failure to reform will most likely hold back progress.