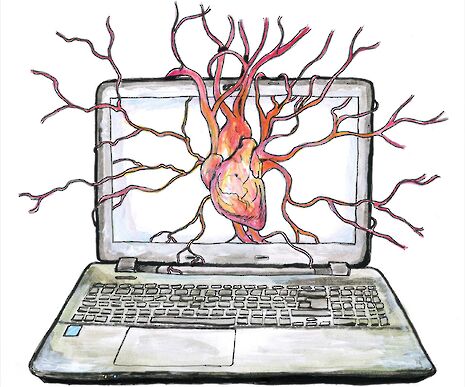

The heart of a computer

Varsity’s web developer Edwin Balani takes us into the beating heart of a computer – its operating system

The “heart” of a computer is a fantastically vague topic. Rather than survey all of it, I hope to explain how an operating system (or ‘OS’ for short) tries its best to solve issues of scarcity and competition within a computer. It is the invisible player critical to a computer’s smooth running, and one quite different from the guts that can be found by dissecting the belly of a laptop. When, for example, someone writes a Varsity article while Tom Misch songs play in the background (hypothetically speaking, of course) and experiences no interruption or hold-up from the computer they’re using, they have the OS to thank.

At the beginning of the post-war era, when computers occupied great chunks (or the entirety) of a room, they had only human operators, and the words “operating system” would have fallen on unknowing ears. A computer’s users (say, astrophysics researchers needing to process radio telescope data) would leave a program’s code with the operators; USB memory sticks were still far in the future at this point, and the more conventional format was a stack of punched cards. The operators would load and run each program in turn, and the researcher could return hours or days later to pick up the printed-out results of their program (or, if unlucky, to hear the news that their badly written program had crashed).


In the jargon, each program to run was a “job”. With time, computers gained features to make the running of jobs easier: for instance, a set of many jobs could be loaded from stacks of cards onto one tape, which was then read and executed by the computer as a single batch. What was missing, however, was the ability to run two or more jobs simultaneously, which is sensibly named “multitasking”.

Here we have our first encounter with scarcity and competition at play. In a truth that holds today almost as much as it did half a century ago, true multitasking was impossible: at a basic level, a running job has exclusive use of the computer. Instead, then, the computer must rapidly switch its focus between however many jobs it is juggling. To ask a human to do this would be futile: there was a real need for the computer to handle it entirely by itself.
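The rapid switching described above can be sketched in a few lines of Python. This is only an illustrative toy, not how any particular operating system does it: the function name `round_robin`, the job names and the idea of measuring work in abstract “units” are all assumptions made for the example. Each job gets a short turn (a “quantum”) and then goes to the back of the queue if it still has work left.

```python
from collections import deque


def round_robin(jobs, quantum):
    """Simulate time-slicing between jobs (a hypothetical toy model).

    `jobs` maps a job name to the units of work it still needs.
    `quantum` is the longest turn any job gets before the computer
    switches to the next one; it is assumed to be a positive integer.
    Returns the list of (job name, units run) turns, in order.
    """
    queue = deque(jobs.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(quantum, remaining)
        timeline.append((name, ran))
        if remaining > ran:
            # Unfinished jobs rejoin the back of the queue.
            queue.append((name, remaining - ran))
    return timeline


if __name__ == "__main__":
    for name, ran in round_robin({"article": 3, "music": 5}, quantum=2):
        print(f"{name} runs for {ran} unit(s)")
```

With a small enough quantum, both jobs appear to make progress at once, even though only one of them is ever running at any instant.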

Early forms of a solution were called “resident monitors”, as they retained snippets of code in the computer’s memory that were executed periodically, to keep track of which jobs were in progress, completed, and still to be run. What was missing from these systems, however, was the ability to use the computer interactively, meaning that users can ‘log in’ directly, type commands, and see the computer get to work in front of them. This would absolve the operators of having to load the programs in themselves, which was the final piece of tedious manual work involved in the computer’s day-to-day running.

It was around this time that NASA put Buzz Aldrin and Neil Armstrong on the Moon in the Apollo 11 mission; crucial to its success was the Apollo Guidance Computer (AGC). The AGC’s software was built around the same concept of “jobs”: this time, they were hard-coded during the AGC’s manufacture, and the computer could multitask to keep up to seven jobs running at any one time. (The actual code used to program the AGC is openly available online, and contains such affectionately named routines as “BURN, BABY, BURN”.) The AGC was versatile: astronauts could issue commands to run certain programs, to query status information, to check warnings thrown by the computer, and indeed to disable the computer either partially or totally if things went very wrong. All of this human-computer interfacing took place at the DSKY, pronounced ‘diskey’, and at its core it was an interactive manner of using a computer, at a time when many were still batch-processing machines.

Back on Earth, interactive computing emerged in the rise of “time-sharing systems”, around the turn of the 1970s; as the name suggests, these systems still allowed the computer’s resources to be distributed fairly, but this time the users could sit directly at terminals to use it. This was the point at which the term “operating system” started to gain a meaning. However, the terminals were ‘dumb’: they had a keyboard and a display, but were connected by long cables to the computer proper. Keystrokes were sent up the cable to the ‘mainframe’ — a name hinting at the computer’s centralised nature — and the display would simply show whatever text (more complex graphics being a couple of decades away) was sent back down the line.

Up to this point, computers had needed to worry little about fair use, since they were tended by skilled operators. Taking the hands off the wheel and letting ‘users’ loose on the system themselves presents challenges: how to stop any one user from running an intensive program that consumes resources to the detriment of others? After all, an operator could feasibly flick through a stack of punched cards (which often had a human-readable printout of the line of code that the holes spell out) as a ‘sanity check’ before loading it into the computer, and potentially spot any fatal bugs, but this is impossible when the users are loading the programs in directly. A key function of the OS, therefore, is to act as the hardware’s bodyguard in addition to being a reasonably fair allocator, to prevent that hardware from being brought to its knees.

Not only does the heart of the computer (by which I now mean its hardware resources) need protection from being overstressed; running programs also need protection from each other. Whereas early computers gave one process (which, roughly speaking, is a running copy of a program) full unfettered access to the entirety of their memory, a multitasking system needs to keep the memory for each process separate and protected from others; one classic scheme for doing so is called “memory segmentation”. To let processes read other processes’ memory (where that memory could hold anything, from banal calculations to private financial data) or, even worse, to write to that memory and silently modify it, would be disastrous. The Spectre and Meltdown vulnerabilities, announced publicly at the very start of 2018, highlight the great risks involved when flaws in a computer break down these security barriers.
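To make the idea of separate, protected memory a little more concrete, here is a minimal Python sketch of the base-and-limit check at the heart of segmentation. Everything in it (the `Segment` and `Memory` classes, the `SegmentationFault` exception, the sixteen-cell memory) is invented for illustration; real hardware performs this check in circuitry on every memory access rather than in software.

```python
class SegmentationFault(Exception):
    """Raised when a process reaches outside its own segment."""


class Segment:
    """A process's slice of memory: `limit` cells starting at `base`."""

    def __init__(self, base, limit):
        self.base = base
        self.limit = limit

    def translate(self, offset):
        # The process only ever sees offsets 0..limit-1; anything else
        # is refused before it can reach another process's memory.
        if not 0 <= offset < self.limit:
            raise SegmentationFault(f"offset {offset} is outside the segment")
        return self.base + offset


class Memory:
    """A toy physical memory shared by every process."""

    def __init__(self, size):
        self.cells = [0] * size

    def read(self, segment, offset):
        return self.cells[segment.translate(offset)]

    def write(self, segment, offset, value):
        self.cells[segment.translate(offset)] = value


if __name__ == "__main__":
    memory = Memory(16)
    process_a = Segment(base=0, limit=8)
    process_b = Segment(base=8, limit=8)
    memory.write(process_a, 3, 42)
    print(memory.read(process_a, 3))  # process A sees its own data
    print(memory.read(process_b, 3))  # process B's cell 3 is a different cell
    try:
        memory.read(process_a, 8)  # would land in process B's segment
    except SegmentationFault as error:
        print("blocked:", error)
```

The two processes use the same offsets but never touch the same cell, and an attempt to step past the end of a segment is stopped rather than silently reading a neighbour's data.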

These newfound requirements inevitably add complexity to the design of an operating system, and details soon start to become intricate. At this point, if we were studying the human heart, we would go beyond its four chambers, aorta, vena cava and pulmonary artery and vein, to study more closely the peaks and troughs of an ECG trace, for instance. Yet, in a manner mimicking the harmony often seen in the natural world, computer hardware has adapted to give operating systems enhanced abilities. Modern processors can enforce this kind of memory protection in hardware, for instance. They also provide layered ‘protection rings’: user processes reside in the outermost, least privileged ring, and the OS itself lives in the innermost, “ring 0”. I think the latter is a fitting name for what is indeed the very core of the computer.
