Java, Python, and morality: why AI developers should learn ethical code

Julia Hotz looks at the existential risk that AI may pose in the future

On 21st December 1938, in the cold confines of Nazi Germany, two scientists made one of history’s most consequential discoveries to date. And unbeknownst to many, it was an accident.

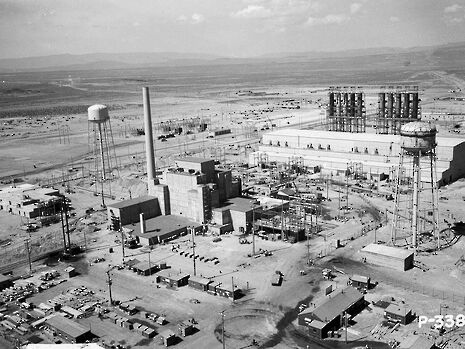

As historian Richard Rhodes explains in his Pulitzer Prize-winning The Making of the Atomic Bomb, radiochemists Otto Hahn and Fritz Strassmann thought that “bombarding” uranium nitrate with neutrons would “probe the atomic nucleus” to produce elements heavier than uranium. Instead, the opposite occurred; “the uranium had split into two” – marking a “fiercely exothermic” reaction, and the final step in unlocking the “enormous energy” long known to be contained in the atom.

Of course, what happened next was hardly an accident; after these findings were published, scientists from Nagasaki to New York quickly replicated them and passed the results on to their respective governments, who would inevitably use them to sponsor the development of the atomic bomb. This prompted the proliferation of nuclear weapons on a global scale and, consequently, a doctrine of ‘mutual assured destruction’: in effect, a promise not to activate such weapons for the sake of all humankind. This fruitless catch-22 continues today – prompting many to wonder why we developed nuclear weapons in the first place.

On one hand, perhaps this is just how science works. As Manhattan Project physicist J. Robert Oppenheimer put it, “The deep things in science are not found because they are useful; they are found because it was possible to find them.” And after all, as Rhodes himself concedes, “If Hahn and Strassmann hadn’t discovered nuclear fission in Germany, others would have soon discovered it somewhere else.”

But on the other hand, it’s crucial to understand that Hahn, who had personally experienced the senselessness of violence in the First World War, soon recognised the potential military applications of his findings, and “seriously considered suicide” thereafter.

This invites us to revisit Oppenheimer’s logic: if scientists discover things just because they can be discovered, why begin looking in the first place? Is ‘usefulness’ to society truly irrelevant?

These questions bear particular significance today, as theorists contemplate the potential effects of technology developing at increasingly rapid rates. Through no fault of their own, developers – working under the pace set by Moore’s law and fierce global competition – are encouraged to develop (or ‘innovate!’) for the sake of development, and are often discouraged from thinking carefully about the long-term consequences of what they build, such as a global pandemic or a catastrophic super-intelligence.

Fortunately, awareness of this phenomenon is gaining global traction, thanks in part to groups such as Cambridge’s own Centre for the Study of Existential Risk (CSER). Co-founded by esteemed astrophysicist Martin Rees, CSER investigates the potential long-term risks arising from biology, environmental science and AI, and researches proactive solutions to mitigate such existential concerns. These findings have influenced both the public and private sectors, including the recent establishment of AI-ethics bodies such as the Partnership on Artificial Intelligence to Benefit People and Society, co-founded by DeepMind, Facebook, Amazon, IBM, and Microsoft.

But when DeepMind co-founder Shane Legg came to campus two weeks ago to discuss the potential AI-induced catastrophes of the distant future, he touched little on the concerns of today, such as the capacity for automation to overtake many of the jobs we find complex, fulfilling, and best suited to humans. When asked about this potential – as confirmed by a widely cited study from Oxford – Legg countered that “the whole jobs thing is a bit exaggerated” and that even if there were to exist, for instance, a robotised primary school teacher, he would still prefer his own daughter to have a human instructor.

Even if Legg’s scepticism is justified, the contradiction remains: just as Hahn and Strassmann would probably have preferred a world without nuclear weapons, Legg – who has designed some of the most powerful machine learning applications, and whose company motto is to “use [intelligence] to make the world a better place” – claims to prefer human counterparts, at least in certain contexts.

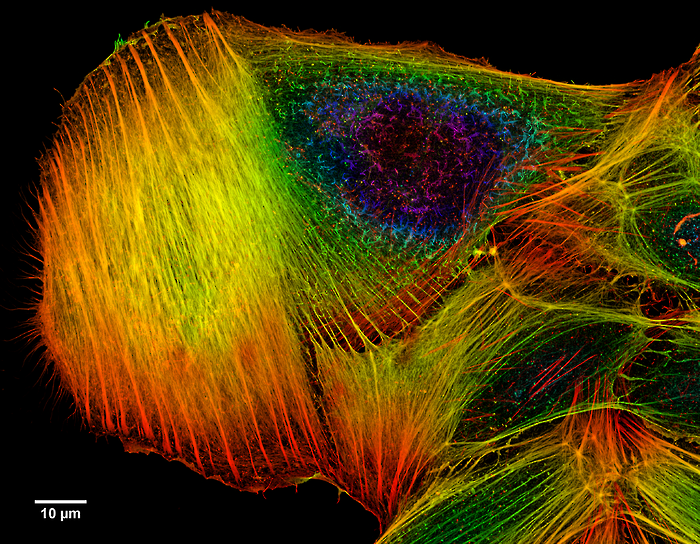

It’s on us, Cambridge, to consider the intersection of science and ethics, critically and collaboratively. As I make a home of this place – passing the iconic Isaac Newton apple tree, pubbing it where Watson and Crick announced their discovery of the structure of DNA, and (apprehensively) taking classes above the very spot where Rutherford pioneered the study of radioactivity – I’m reminded that science has indeed made “my world a better place” in more ways than I can enumerate. And when I talk to my science-studying friends – those researching treatments to cure cancer, or genetically optimising rice production to curb world hunger – I know this trend will only continue.

But I remember, too, that this University boasts names like Bacon and Russell – philosophers who challenged science’s objectivity and direction, and people who believed that codes of science and codes of ethics work best together.
