Rising above the misinformation crisis

Jacob Lewis writes about the difficulties in countering misinformation and the various approaches we can take to solve it

In early March, Twitter announced a new “five-strike” system which progressively locks people out and then bans them from their accounts for posting misinformation related to the COVID-19 vaccine. Is this a pragmatic or useful solution to the ongoing problem, which has only worsened during the pandemic?

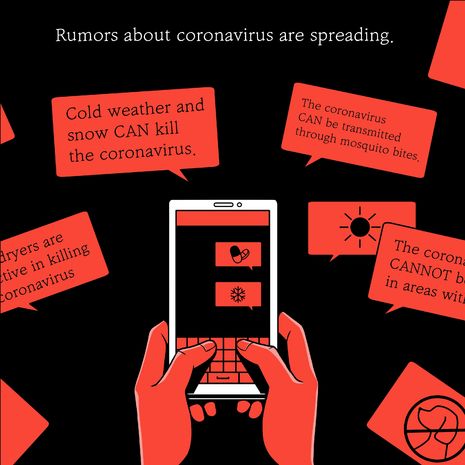

The proliferation of pseudoscientific misinformation is one of the greatest threats to society. Over the last few years, the worrying trend of misinformation spread has been exacerbated by a focus on climate change and the ongoing pandemic; the term “infodemic” has increasingly been used to describe the slew of false or misleading claims that have appeared online since 2020.

One of the key faults with social networks is that they were partially built on the premise that content that a user is most interested in and therefore shown more often is “better” for the user. But as some point out, this does not work in practice because technology doesn’t alter who we are, it only provides an alternative pathway to express human traits performatively; part of our beautiful, terrible condition is the very problem. We have an inherent tendency for bias towards sources shared by people we “trust” in the real world, regardless of the reputation of the initial source. It is this which causes challenges when trying to design a solution to misinformation – you can’t easily change human nature.

A large number of COVID-19 conspiracy theories perpetuated on Facebook or Twitter also have a political subtext; the stark polarisation generated serves to amplify potential misgivings about science. Particularly in the US, there were several theories of China’s deliberate involvement in developing the virus. Memes, both in the informal and technical sense, or “units of cultural transmission”, are especially dangerous in this context as they can easily blur the line between satire and truth. The viewer often has to piece together a few things in order to “get” the joke; this interaction has the effect of drawing them closer to the content. Often they end up resonating with what it’s saying, regardless of whether it was initially satirical or not.


There is certainly an acceptable level of contention within the scientific discourse, without which we might believe science is perfect, and important shifts in consensus might not be initiated. However, the sheer quantity and nature of some content mean that it completely misses the mark in terms of providing an alternative viewpoint. It isn’t healthy, it’s dangerous, and it proliferates with the help of millions of unwitting users of social media. Since it is hard to disagree that a significant amount of power over decision-making in science and health policy ultimately rests with the people, the influence misinformation has (and the consequent potential for damage) cannot be overstated. There are a number of proposed methods of stopping the spread.

The first, most widely discussed and criticised, is simple censorship. Social media posts are reviewed by fact-checking organisations and removed, possibly along with the account responsible. This can be particularly effective when done to high-profile accounts; a 2020 study found that misinformation posted by prominent public figures, termed “top-down” misinformation, accounted for 69% of total social media engagements in the sample used. As well as attracting criticism for supposedly impinging on freedom of speech, this method can be provocative and inadvertently elicit a stronger response from more hardcore members of conspiracy theory groups. One modification is to allow people freedom to post misinformation, but to deliberately limit the spread of such posts to prevent them from spiralling out of control. This was implemented to some extent with WhatsApp, where misleading messages are hard to moderate since chats are not public.

Twitter’s complex strike policy may also go some way to reducing distribution from the source. But the fact remains that accounts are born and die very easily, and it will never be as simple as banning one account, regardless of the number of followers it does cut off from the supply of false claims.

The UK government, having recently published the results of a consultation as a response to the Online Harms white paper, has yet to go forward with legislation to restrict misinformation, and so it is not yet illegal to disseminate it. However, once in place, the law would in theory act as a deterrent and, along with the recently resurrected “Don’t Feed the Beast” campaign, cause people to think before posting. In addition, the Digital, Culture, Media and Sport Committee (DCMSC) produced a report last year on the infodemic relating to COVID-19. The government has also enlisted the help of BAME celebrities in fighting misinformation. A television ad attempted to dispel myths surrounding the vaccine (uptake of which has been lower in ethnic minority communities), such as the idea that it contains meat or will alter human DNA. Such an ad could counteract the effect of high-profile celebrities casting doubt on the benefits of vaccines. However, it has been criticised for being patronising, and it is much easier to spread a myth via social media than it is to dispel one.

Is this enough? Many commentators seem to think we are taking the wrong approach entirely, with alternative suggestions including limits on the time we are exposed to information, and encouraging users to develop cognitive “muscles” in order to critically evaluate information for themselves. Scientists can also combat misinformation by drawing on the trust, described earlier, that people who know them personally place in them.

Ultimately, the solution will have to be more nuanced than any of these alone; a careful combination of informing the public of the dangers, and educating adults and children alike on how to spot misinformation (“prebunking”) will go a long way in tackling posts with which a slightly more critical, educated eye might choose not to engage.
