A cure for cancer? Big data’s big promise

How intelligent data analysis is transforming oncology

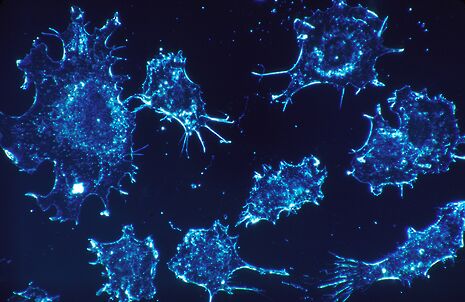

It cannot be denied that cancer is an incredibly complex disease: a single tumour can contain more than 100 billion cells, and each cell can acquire mutations independently. Cancer is also dynamic, and our analysis and treatment must keep pace with this constant change, a task too vast for the human mind alone. This is where big data and cancer biology intersect, and together they show the potential to transform how we treat the one in two of us who will develop cancer.

Tumours are full of data. So many combinations and permutations of genomic abnormalities are possible that, in effect, no two tumours are the same. Big data lets researchers not only scan the genome in its entirety but also compare the genomes of individuals with specific cancers, and even contrast genomic differences between cells within a single tumour. In this way, it reveals exactly what has gone wrong in particular tumours. Researchers take large data sets, look for patterns, and identify mutations that can be targeted with drugs, tailoring therapy to an individual’s mutational makeup.

Its applications are being studied the world over. Shirley Pepke, a physicist turned computational biologist, responded to her own ovarian cancer diagnosis like a scientist and set out to fight it using big data. Drawing on The Cancer Genome Atlas (TCGA) data bank, she applied an automated pattern-recognition technique called correlation explanation (CorEx) to the genetic sequences of 400 ovarian tumours, including her own. CorEx turned up pertinent clues about her tumour’s genetic profile, allowing her to reason that she was most likely to survive if she were given checkpoint inhibitors, a class of drugs designed to stop cancer cells from evading the immune system. Pepke persuaded her doctors, and her cancer was cured.

Such research adds to the growing body of evidence that a rote statistical approach is no longer sufficient for deciding cancer treatment. Being guided predominantly by what the numbers say about the ‘average patient’ is a mistake because, when it comes to cancer, no such individual exists. Sequencing tumours is now faster and cheaper than ever, with companies offering $1,000 genomes. The challenge for 20th-century scientists was generating data; today, the limiting factor is refining the methods used to process and analyse so much of it.

Historically, ideas about applying big data to cancer have been strictly academic, but recent years have seen the start of their clinical translation. The I-SPY2 study is the first clinical trial of its kind, collecting real-time genomic information from patients who have been prescribed certain drugs on the basis of their cancer’s ‘tumour signature’. The data gathered are expected to provide useful insights into biomarkers that signal a response to targeted therapies.

Plenty of challenges lie ahead. Tumours are dynamic entities, hosting a baffling diversity of mutations that can change over time. Still, there is reason to be optimistic. Researchers and clinicians are gradually nudging big data from idealistic speculation to delivery. Hopefully, the patients whose medical histories shape big data’s algorithms will emerge as the biggest winners.